Transformations with Airbyte (Part 3/3)

Overview

This tutorial will describe how to push a custom dbt transformation project back to Airbyte to use during syncs.

This guide is the last part of the tutorial series on transformations, following Transformations with SQL and connecting EL with T using dbt.

(Example outputs are updated with Airbyte version 0.23.0-alpha from May 2021)

Transformations with Airbyte

After replication of data from a source connector (Extract) to a destination connector (Load), multiple optional transformation steps can now be applied as part of an Airbyte Sync. Possible workflows are:

- Basic normalization transformations as automatically generated by Airbyte dbt code generator.

- Customized normalization transformations as edited by the user (the default generated normalization one should therefore be disabled)

- Customized business transformations as specified by the user.

Public Git repository

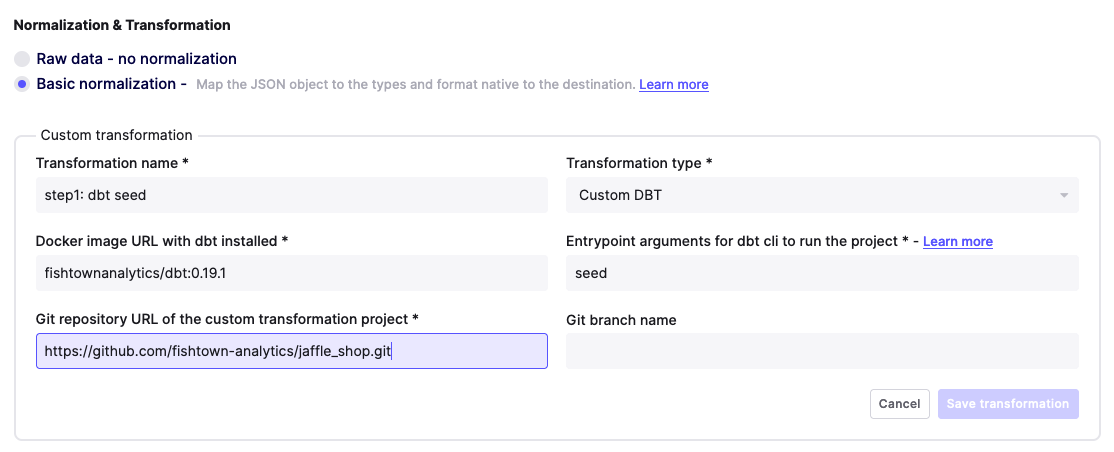

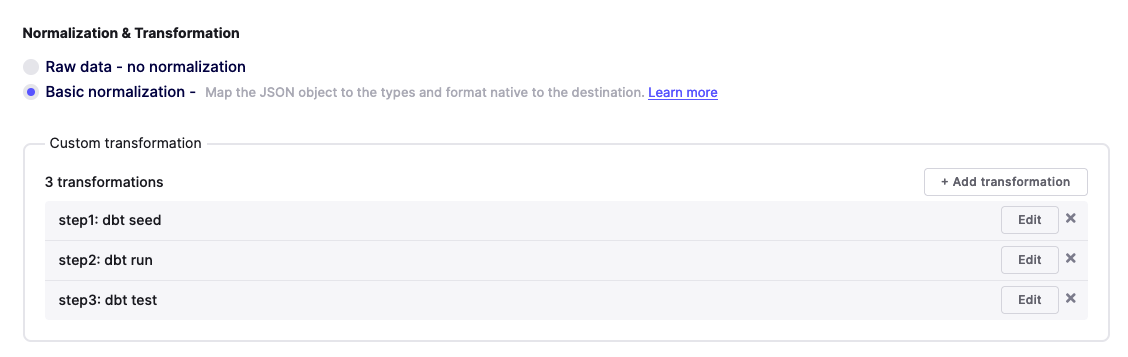

In the connection settings page, I can add new Transformations steps to apply after normalization. For example, I want to run my custom dbt project jaffle_shop, whenever my sync is done replicating and normalizing my data.

You can find the jaffle shop test repository by clicking here.

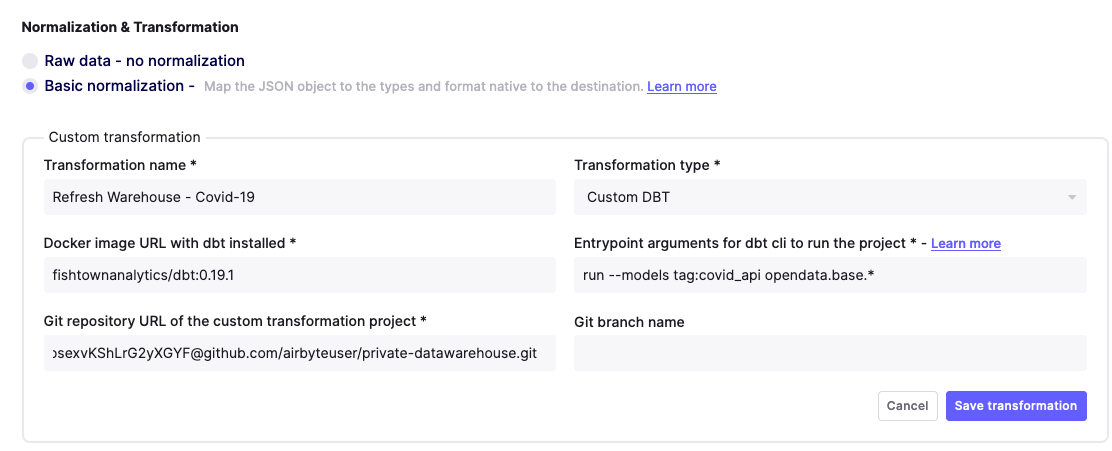

Private Git repository

Now, let's connect my mono-repo Business Intelligence project stored in a private git repository to update the related tables and dashboards when my Airbyte syncs complete.

Note that if you need to connect to a private git repository, the recommended way to do so is to generate a Personal Access Token that can be used instead of a password. Then, you'll be able to include the credentials in the git repository url:

And then use it for cloning:

git clone https://username:token@github.com/user/repo

Where https://username:token@github.com/user/repo is the git repository url.

Example of a private git repo used as transformations

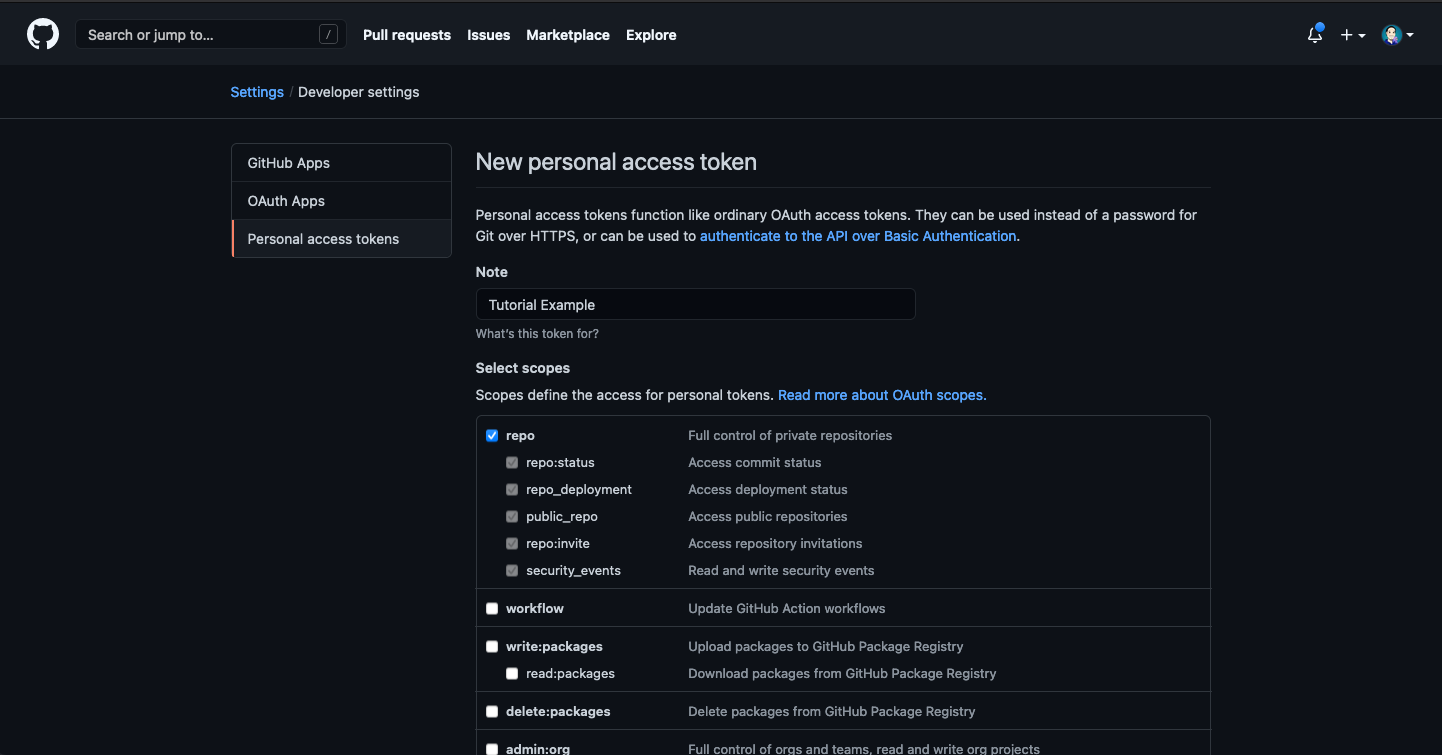

As an example, I go through my GitHub account to generate a Personal Access Token to use in Airbyte with permissions to clone my private repositories:

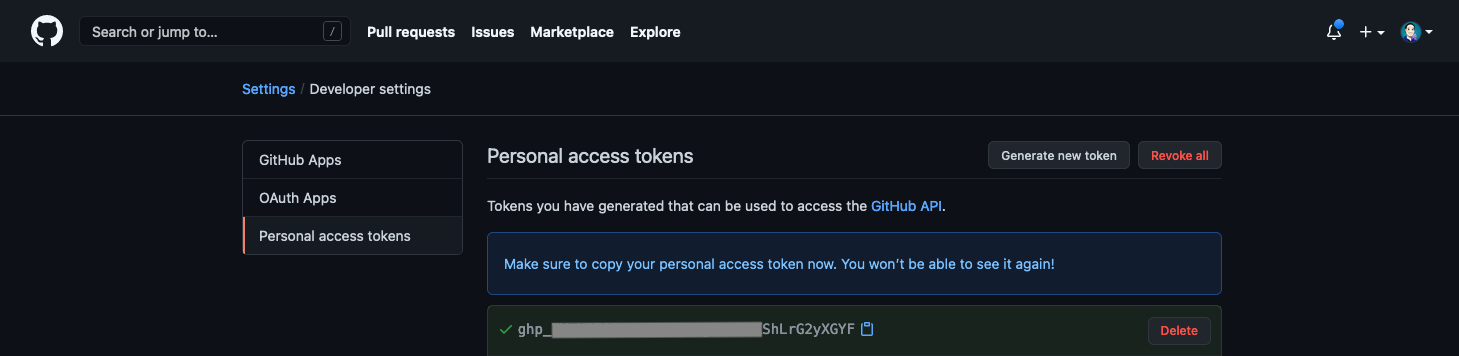

This provides me with a token to use:

In Airbyte, I can use the git url as: https://airbyteuser:ghp_***********ShLrG2yXGYF@github.com/airbyteuser/private-datawarehouse.git

How-to use custom dbt tips

Allows "chained" dbt transformations

Since every transformation leave in his own Docker container, at this moment I can't rely on packages installed using dbt deps for the next transformations.

According to the dbt documentation, I can configure the packages folder outside of the container:

# dbt_project.yml

packages-install-path: '../dbt_packages'

If I want to chain dbt deps and dbt run, I may use dbt build instead, which is not equivalent to the two previous commands, but will remove the need to alter the configuration of dbt.

Refresh models partially

Since I am using a mono-repo from my organization, other team members or departments may also contribute their dbt models to this centralized location. This will give us many dbt models and sources to build our complete data warehouse...

The whole warehouse is scheduled for full refresh on a different orchestration tool, or as part of the git repository CI. However, here, I want to partially refresh some small relevant tables when attaching this operation to a specific Airbyte sync, in this case, the Covid dataset.

Therefore, I can restrict the execution of models to a particular tag or folder by specifying in the dbt cli arguments, in this case whatever is related to "covid_api":

run --models tag:covid_api opendata.base.*

Now, when replications syncs are triggered by Airbyte, my custom transformations from my private git repository are also run at the end!

Using a custom run with variables

If you want to use a custom run and pass variables you need to use the follow syntax:

run --vars '{"table_name":"sample","schema_name":"other_value"}'

This string must have no space. There is a Github issue to improve this. If you want to contribute to Airbyte, this is a good opportunity!

DBT Profile

There is no need to specify --profiles-dir. By default AirByte based on the destination type. For example, if you're using Postgres as your destination, Airbyte will create a profile configuration based on that destination. This means you don't need to specify the credentials. If you specify a custom profile file, you are responsible for securely managing the credentials. Currently, we don't have a way to manage and pass secrets and it's recommended you let Airbyte pass this to dbt.